Java is one of the longest-standing and most widely deployed enterprise programming languages in the world. It is also one of the most frequently attacked platforms because of its many well-documented security vulnerabilities, many of which are tracked as Common Vulnerabilities and Exposures (CVE) entries with very high Common Vulnerability Scoring System (CVSS) scores. The problem is amplified by the fact that countless data center applications still run on older, legacy versions of the platform. Although Java’s original promise was application portability, in practice most core enterprise applications were written to run on a specific version of Java, and that’s where they’ve stayed.

Vulnerability Mitigation Roadblocks

The two primary reasons that legacy Java security risks persist are the cost of mitigation and its operational impact. Mitigating legacy Java vulnerabilities typically requires upgrading the Java runtime that hosts the application. This process is costly because it requires extensive application testing and re-qualification, and if the application itself must be modified, the price tag grows even higher. Such an investment is often hard to justify when it rests on ambiguous estimates of a vulnerability’s security risk and exploitability.

Operational risk is at least as big a barrier to mitigation as cost, if not a bigger one. Enterprise IT organizations value application availability above all else, because their job performance is often measured heavily against this metric. Any modification to a production application introduces risk and the threat of downtime, and no amount of testing can guarantee that a change will not break the application. Most modifications are innocuous, but the perceived risk remains.

Unfortunately, political risks typically outweigh concerns about vulnerabilities, and legacy applications are often simply left alone. Given these obstacles, is it reasonable to expect the Security team to be the change agent for securing legacy Java applications? Not necessarily, because Security may not even be aware of the risk unless it has dedicated AppSec resources. And even if the Security team raises a concern, without hard data on the threat it won’t be in a position to insist that mitigating a vulnerability justifies the risk of modifying a production application.

As a workaround, the Security team may try to address the risk with network-based tools, but these typically cannot protect against sophisticated attacks or attacks launched from inside the network. Static or dynamic code analysis tools may already be in use for application security, but they cannot mitigate attacks that exploit weaknesses lower in the stack, at the Java runtime level. To make matters worse, these tools usually produce reams of findings on potential vulnerabilities, which take a long time to review, prioritize, and remediate. In short, the risk from legacy Java falls through the cracks in typical enterprise environments.

Alternative Measures

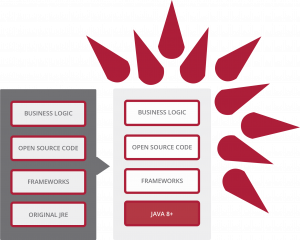

Fortunately, a new approach has emerged for mitigating legacy Java risk: Java containerization. Containerization wraps an application and all the ancillary software it requires in a single, portable package. Although the concept is not new, containerization is enjoying renewed popularity thanks to technologies such as Docker, which are well suited to cloud deployment.

In the Java security context, technologies already exist that wrap a Java application and its underlying legacy Java platform within an up-to-date runtime environment. With this approach, external threats are intercepted by the newer, more secure Java Virtual Machine (JVM) instead of reaching the older version directly. And because the application still executes in its original legacy Java environment, the model requires no changes to existing code. This avoids the application-stability risk that comes with upgrading the JVM while still protecting the application from exploits.
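As a loose, application-level analogy only (the products described here perform their interception inside a modern JVM, not through Java interfaces), the sketch below shows the shape of the idea: a modern layer vets each incoming request and then delegates to the legacy code, which is never edited. `LegacyService`, `LegacyOrderService`, `InterceptingWrapper`, and the blocked patterns are all hypothetical names chosen for illustration.

```java
import java.util.List;

/**
 * Conceptual analogy only: real Java containerization intercepts calls
 * inside a modern JVM. All names here are hypothetical.
 */
public class InterceptionSketch {

    /** Stands in for the entry point of the unmodified legacy application. */
    interface LegacyService {
        String handle(String request);
    }

    /** The "legacy" code: left untouched, and it trusts its input blindly. */
    static final class LegacyOrderService implements LegacyService {
        @Override
        public String handle(String request) {
            return "processed:" + request;
        }
    }

    /** The "modern" layer: inspects each request, then delegates it unchanged. */
    static final class InterceptingWrapper implements LegacyService {
        private final LegacyService delegate;
        private final List<String> blockedPatterns;

        InterceptingWrapper(LegacyService delegate, List<String> blockedPatterns) {
            this.delegate = delegate;
            this.blockedPatterns = blockedPatterns;
        }

        @Override
        public String handle(String request) {
            // Reject anything matching a known-bad pattern before the legacy code sees it.
            for (String pattern : blockedPatterns) {
                if (request.contains(pattern)) {
                    return "blocked";
                }
            }
            // Otherwise pass the request through exactly as received.
            return delegate.handle(request);
        }
    }

    public static void main(String[] args) {
        LegacyService app = new InterceptingWrapper(
                new LegacyOrderService(), List.of("../", "${jndi:"));
        System.out.println(app.handle("order=42"));           // processed:order=42
        System.out.println(app.handle("file=../etc/passwd")); // blocked
    }
}
```

The point of the decorator shape is that protection is added around the legacy component rather than inside it, which mirrors the article’s claim that no existing code has to change.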

Java containerization also provides security benefits beyond running legacy Java within a modern Java runtime. For example, security policies can be implemented within the modern JVM. Because they are enforced inside the JVM, these policies can be very granular and application-aware. They can prevent unauthorized access to specific areas of the file system, or block Java capabilities that the application does not use but that attackers often exploit, such as Reflection API calls or process forking. Policies can even defend against attacks that exploit poor input validation, such as SQL injection, using a technique known as “taint tracking”: data from untrusted sources is marked as tainted, the mark follows the data as it flows through the application, and tainted data is checked before it reaches a sensitive operation such as a database query.
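The taint-tracking idea can be sketched in plain Java. This is a minimal illustration under stated assumptions: a containerized JVM would track taint internally without any wrapper type, whereas here a hypothetical `TaintedString` class carries a per-character taint mask, concatenation propagates it, and a hypothetical sink check `safeForSqlSink` rejects a query only if a SQL metacharacter in it came from untrusted input.

```java
import java.util.Arrays;

/** Minimal taint-tracking sketch; all class and method names are hypothetical. */
public class TaintSketch {

    /** A string plus a per-character mask recording which characters came from untrusted input. */
    static final class TaintedString {
        final String value;
        final boolean[] taint;

        private TaintedString(String value, boolean[] taint) {
            this.value = value;
            this.taint = taint;
        }

        /** Source: everything read from a request is marked tainted. */
        static TaintedString fromUntrusted(String s) {
            boolean[] mask = new boolean[s.length()];
            Arrays.fill(mask, true);
            return new TaintedString(s, mask);
        }

        /** Trusted literals (such as query templates in the code) carry no taint. */
        static TaintedString trusted(String s) {
            return new TaintedString(s, new boolean[s.length()]);
        }

        /** Propagation: concatenation preserves each character's taint bit. */
        TaintedString concat(TaintedString other) {
            boolean[] mask = Arrays.copyOf(taint, taint.length + other.taint.length);
            System.arraycopy(other.taint, 0, mask, taint.length, other.taint.length);
            return new TaintedString(value + other.value, mask);
        }
    }

    /** Sink check: reject the query if any tainted character is a SQL metacharacter. */
    static boolean safeForSqlSink(TaintedString query) {
        for (int i = 0; i < query.value.length(); i++) {
            char c = query.value.charAt(i);
            if (query.taint[i] && "'\";".indexOf(c) >= 0) {
                return false;
            }
        }
        return true;
    }

    /** Builds a query the unsafe way legacy code often does: by string concatenation. */
    static TaintedString buildQuery(String userInput) {
        return TaintedString.trusted("SELECT * FROM users WHERE name = '")
                .concat(TaintedString.fromUntrusted(userInput))
                .concat(TaintedString.trusted("'"));
    }

    public static void main(String[] args) {
        System.out.println(safeForSqlSink(buildQuery("alice")));         // true
        System.out.println(safeForSqlSink(buildQuery("x' OR '1'='1"))); // false
    }
}
```

Tracking taint per character, rather than per string, is what keeps the quotes in the trusted query template from being flagged; only quotes that arrived with user input cause a rejection.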

Placing policy enforcement within the JVM lets the security function align closely with expected application behavior while keeping the performance impact small. This enables organizations to keep running legacy Java applications securely and indefinitely, since containerization acts as a virtual patch against known and unknown vulnerabilities without requiring any code changes.
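To make “aligned with expected application behavior” more concrete, here is a minimal, deny-by-default sketch of the file-system, process-forking, and Reflection policies described above. It shows only the decision logic: in a containerized JVM these checks would be triggered automatically whenever the application touches a file, spawns a process, or uses reflection, and that hooking is omitted here. The class `AppPolicySketch`, the directory `/opt/legacy-app/data`, and the class name `com.example.billing.Invoice` are hypothetical.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Set;

/** Hypothetical application-aware policy: allow only expected behavior, deny the rest. */
public class AppPolicySketch {

    enum Decision { ALLOW, DENY }

    private final Path allowedDataDir;
    private final Set<String> execAllowlist;
    private final Set<String> reflectionAllowlist;

    AppPolicySketch(Path allowedDataDir, Set<String> execAllowlist, Set<String> reflectionAllowlist) {
        // Normalize once so later prefix checks compare canonical forms.
        this.allowedDataDir = allowedDataDir.toAbsolutePath().normalize();
        this.execAllowlist = execAllowlist;
        this.reflectionAllowlist = reflectionAllowlist;
    }

    /** File access is allowed only beneath the application's own data directory. */
    Decision checkFileRead(Path requested) {
        // normalize() collapses "../" segments, so traversal tricks cannot escape the directory.
        Path normalized = requested.toAbsolutePath().normalize();
        return normalized.startsWith(allowedDataDir) ? Decision.ALLOW : Decision.DENY;
    }

    /** Process forking is denied unless the exact command is expected. */
    Decision checkExec(String command) {
        return execAllowlist.contains(command) ? Decision.ALLOW : Decision.DENY;
    }

    /** Reflection is allowed only on classes the application is known to inspect. */
    Decision checkReflection(String className) {
        return reflectionAllowlist.contains(className) ? Decision.ALLOW : Decision.DENY;
    }

    public static void main(String[] args) {
        AppPolicySketch policy = new AppPolicySketch(
                Paths.get("/opt/legacy-app/data"),
                Set.of(),                                   // this app never forks processes
                Set.of("com.example.billing.Invoice"));

        System.out.println(policy.checkFileRead(Paths.get("/opt/legacy-app/data/orders.csv")));          // ALLOW
        System.out.println(policy.checkFileRead(Paths.get("/opt/legacy-app/data/../../../etc/passwd"))); // DENY
        System.out.println(policy.checkExec("/bin/sh"));                                                 // DENY
        System.out.println(policy.checkReflection("sun.misc.Unsafe"));                                   // DENY
    }
}
```

Because every allowlist is built from what the application is known to do, anything outside that profile, including an exploit of a not-yet-disclosed vulnerability, is denied by default. This is the sense in which such a policy can act as a virtual patch.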